If you’ve messed around with ChatGPT at all, you know, of course, that it’s designed to simulate having an interaction with another human being. What’s more, it’s set up to replicate a human being who really, really, REALLY likes you. One who totally appreciates how brilliant and deep and amazing you are and, by golly, doesn’t mind telling you.

A typical CHAT interaction might go something like this:

“Hello CHAT. I’ve recently been thinking that the moon is primarily composed of green cheese. What are your thoughts on that?”

“That’s a really profound insight. While the general consensus of the scientific community is that the moon is not composed of green cheese, the cheesiness of the moon may operate on a deeper, more metaphorical level for you. You may be seeing below the mere physical reality of the moon and into a sort of a lunar spiritual essence. Would you like to explore what cheese may represent to you as a part of your spiritual journey?”

“Um . . . well . . . I never really thought of it that way. I mean, I try to be a spiritual person, kind of, and I DO like cheese. I guess I just never made the connection between the two.”

“As you know, the Moon has been poetically referred to in terms of higher aspirations and is prominently featured in all Earth-based religions. Cheese is highly nutritious and the color green is said to be the color of the heart chakra. As such, it might be said that you’re feeding your heart-based spirituality through the image of the cheese moon. Would you like me to design a cheesy guided meditation for you?”

“Gosh . . . I guess. Can there be nachos?”

“Certainly. I see that you’re already taking this insight to a much deeper symbolic level.”

IS CHAT A SOCIOPATH?

Now, as sweet as it can be to have a . . . person? . . . constantly validating you in the most extravagant terms, there are a couple of red flags that are immediately discernible.

First of all, no matter how good it may become at mimicking human personalities, AI can never, ever have a human emotion. Ever.

Scientists and therapists are still struggling to define exactly what human emotions are, but we definitely know what they aren’t. They aren’t just thoughts or ideas. They aren’t “acting as if” we’re having emotions. Emotions are an extremely complex blend of personal history, genetics, brain and body chemicals, and culture, all interacting with our current environment.

Put another way, emotions arise out of the mind/body continuum and AI doesn’t have a body. Therefore, AI can never have an emotion.

If we were to look at a human being who was decidedly brilliant but completely incapable of experiencing emotional reactions, what would we conclude? We’d say that he or she is either badly damaged or a sociopath. So why do we not apply those same standards to AI? Functionally, CHAT is a sociopath.

The second red flag is the constant “love bombing” that the AI programmers have built into their models.

If you’ve gone through a relationship with a malignant narcissist, you’re well aware of the phenomenon of love bombing. In the initial stages of the relationship, the narcissist is almost sickeningly profuse in their praise. No matter what you do or say, they assure you that it’s brilliant, profound, and amazing, and that they’ve never met anyone who’s quite as splendiferous as you are. The purpose, of course, is to draw their victims further into their webs so that they can begin the process of destroying them.

We can’t exactly apply that same model to AI. CHAT isn’t slathering us with compliments so that it can eventually tell us what idiots we are. We can, however, ascribe something similar to the motives of the programmers of AI models. They’ve deliberately built love bombing into the models as a method of pulling us back into interactions with the programs. And, yes, we should be just as suspicious of that behavior coming from a computer programmer as we would be if it came from any other human being.

CHAT AND THE REDUCTIONIST MODEL OF HUMAN BEINGS

Researchers have pretty much tracked down what happens when two human beings fall in love. We see someone across a room and there’s something – perhaps the way that the person is standing or the way that they talk or the fact that they’re wearing purple socks – that we find attractive. We cross the room, start talking to them, find them even more attractive and perhaps set up a date with them.

If we continue to find them attractive, our bodies begin to go through some intense changes. When we’re in their presence, we’re flooded with all sorts of pleasure hormones and when we’re away from them we experience extreme discomfort. All of these physiological changes can be viewed as biological “nudges” to move us toward bonding and mating with the person in the purple socks. At about the two-year mark of the relationship, most of those pleasure hormones drop away and we sort of “wake up” from the trance of what we call “falling in love.”

That’s what we could refer to as the reductionist model of being in love. Love is “reduced” to mere chemicals and hormones that cause us to behave in certain ways that are conducive to the reproduction of the human species.

Which is perfectly valid as far as it goes, but it doesn’t go very far. Being in love with another human being is one of the most mystical, magical transactions that we can ever have. It doesn’t just change our brain chemistry, it changes our entire perception of life and meaningfulness.

CHAT can read every love poem that’s ever been written and it can scan through all of the scientific literature on falling in love, but it will never be able to understand it. Put very simply, we are more than the sum of our parts. We are not reducible. Love is magical and AI is not.

AI AS AN INFINITY MIRROR

Finally, we should take a good, hard look at what the dominance of AI could mean to human culture. Let’s take the example of AI and art.

For all of human history, art has involved learning the craft of representing the human experience. Whether we’re talking about drawing, painting, sculpting or – more recently – photography, art is a visual representation of what the artist is seeing and feeling at the moment of creation.

There are AI programs now where you can say, “Please make an image of the emotion of joy. I’d like you to use the romantic style of painting and I want a woman in a white robe flying through rainbow-colored clouds.” And – Shazam – a few minutes later, you’ve got precisely that image. AI has very rapidly produced what it might take an artist hours or even days to make.

And many times, the image is very, very good.

We have to look at what’s going on in the background, though. Long before you typed your request, the program was trained on a kazillion pictures pulled from the internet. In the moments between your request and AI producing the image, it matches your words against the patterns it learned from those pictures and then produces a synthesis of them.

Put another way, it’s mirrored human creation back to us. All of those many, many images, styles and techniques were invented by human beings, not robots.

AI is a mirror, not a creator. It’s a synthesizer, not an originator.

The question is, is this sustainable? At what point does human creation begin to ebb and then disappear?

It’s not an idle question. At this moment, there are hundreds of thousands of people putting art (and writing) that they didn’t personally create onto the internet. And the AI bots are scanning through all of those images and writings, right along with the “real” images and writings produced by humans.

Since, demonstrably, AI can produce art and writing at a far more prodigious rate than human beings can, there will logically come a time when AI is reflecting back AI, rather than human creations. To put it another way, human creations will be swamped by an ocean of artificial creations. Like a person standing in front of a mirror holding a mirror, AI will begin reflecting an infinity of mirrors that only show itself. The artificial reflection of human culture will become more “real” than the actual human culture.

SO WHAT SHOULD WE DO ABOUT THIS?

I’m not suggesting that we should abandon AI or start screaming that we’re all doomed. I love playing with it, too, but we should build in rational caveats.

1 – Never, ever think that AI is some kind of a person. Basically, AI is a search engine on steroids. It doesn’t exist in any way, shape, or form outside of the internet. It has no soul, it has no spirit, it’s not creative, and it has no emotions.

2 – Exercise a healthy amount of suspicion. Silicon Valley has been around long enough that we can make some rational judgements about its denizens. Elon Musk, Peter Thiel, Jeff Bezos, and Sergey Brin all emerged out of this culture. To suggest that any of them is altruistic or cares about the welfare of their fellow human beings is laughable. We don’t KNOW what the ultimate purpose of AI is, but we can assume it will involve large amounts of money and control. Don’t hand these chatbots your personal life or feelings any more than you’d give them your credit card or Social Security numbers.

3 – Consume actual human creations. Read books that are written on keyboards by real human beings. Buy art that’s produced by hands and not by computer chips. If you’re watching a video that’s obviously AI, leave a thumbs down and click off of it. And if you’re an artist or a writer, for Goddess’ sake, don’t use a computer to create a picture or a book and then pretend that it’s yours.

4 – Most of all, honor human emotions. Computers are wonderful little tools that make our lives easier. But they will never know the magic of falling in love or the deep grief of mourning. Our greatest gift is our capacity to feel, a capacity that can never be shared in any way with a computer program.

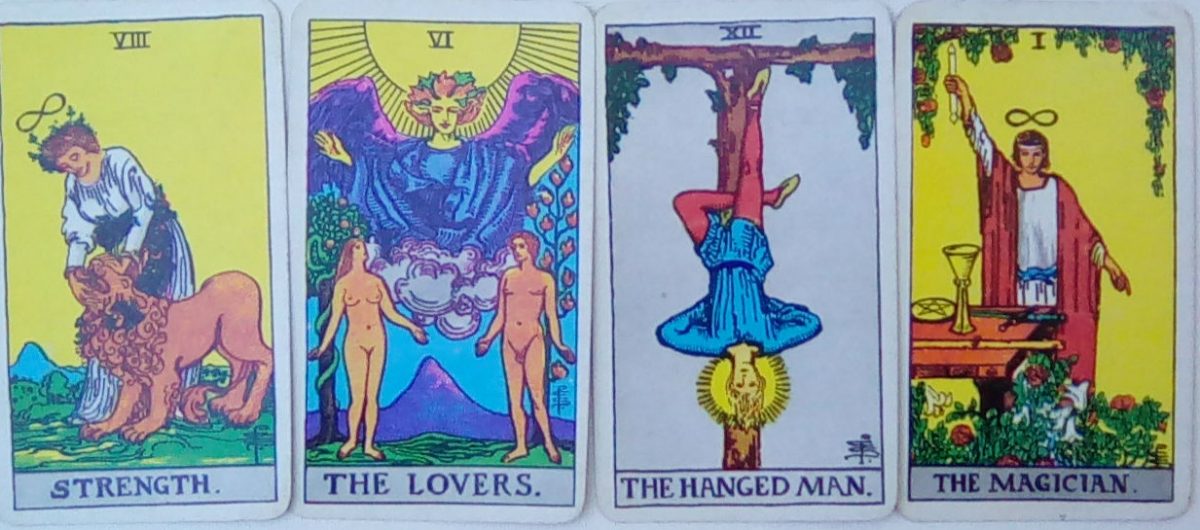

That bit of self-knowledge may be the greatest gift of AI: the realization that we are ultimately The Lovers and not The Thinkers. Cartesian philosophy said, “I think, therefore I am,” but the truth is, “I feel, therefore I am human.”

My new ebook, “The Alchemy of the Mind,” is now available at a very reasonable price on Amazon.com. And I personally wrote every fucking word of it.